Image Classification with Deep Learning

The cats-vs-dogs binary classification data comes from Kaggle. The dataset contains 25,000 images of cats and dogs.


https://www.kaggle.com/c/dogs-vs-cats/data

```python
from glob import glob
import os
import numpy as np
import torch
import torchvision.transforms as transforms
from torchvision.datasets import ImageFolder
import matplotlib.pyplot as plt
import torchvision.models as models
import torch.nn as nn
import torch.optim as optim
import torch.optim.lr_scheduler as lr_scheduler
import time
from torch.autograd import Variable
```

0. Data preprocessing

```python
path = './Data/dogsandcats'

# Read all images in the original Kaggle train folder (cat.0.jpg, dog.0.jpg, ...)
files = glob(os.path.join(path, 'train', '*.jpg'))
print(f'Total no of images {len(files)}')
no_of_images = len(files)

# Shuffled indices used to carve out a validation set
shuffle = np.random.permutation(no_of_images)

# Create train/ and valid/ directories with one subfolder per label,
# so that ImageFolder can later load ./Data/dogsandcats/train and ./Data/dogsandcats/valid
for t in ['train', 'valid']:
    for folder in ['dog', 'cat']:
        os.makedirs(os.path.join(path, t, folder), exist_ok=True)

# Move 2,000 randomly chosen images into the valid folder
for i in shuffle[:2000]:
    image = os.path.basename(files[i])   # e.g. 'cat.123.jpg' (works on any OS)
    folder = image.split('.')[0]         # label: 'cat' or 'dog'
    os.rename(files[i], os.path.join(path, 'valid', folder, image))

# Move the remaining images into the train folder
for i in shuffle[2000:]:
    image = os.path.basename(files[i])
    folder = image.split('.')[0]
    os.rename(files[i], os.path.join(path, 'train', folder, image))
```

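Output from the original run (at that point the glob matched no files, so the count is 0; on a fresh copy of the Kaggle training images it should report 25,000):

```
Total no of images 0
```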
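The split only depends on the file names, so it can be checked without touching the disk. The following is a minimal sketch of the same logic, assuming Kaggle-style names such as `cat.0.jpg`. `plan_split` is a hypothetical helper, not part of the original code. It also swaps `np.random.permutation` for a seeded `random.Random` so the split is reproducible.

```python
import os
import random


def plan_split(files, root, n_valid=2000, seed=0):
    """Hypothetical helper: list (source, destination) moves for a train/valid split.

    The label is the part of the file name before the first '.',
    e.g. 'cat' for 'cat.0.jpg'.
    """
    order = list(range(len(files)))
    random.Random(seed).shuffle(order)  # seeded, so the split is reproducible
    plan = []
    for rank, i in enumerate(order):
        image = os.path.basename(files[i])
        label = image.split('.')[0]
        split = 'valid' if rank < n_valid else 'train'
        plan.append((files[i], os.path.join(root, split, label, image)))
    return plan


root = os.path.join('Data', 'dogsandcats')
files = [os.path.join(root, 'train', f'{c}.{k}.jpg') for c in ('cat', 'dog') for k in range(5)]
plan = plan_split(files, root, n_valid=2)
splits = [dst.split(os.sep)[-3] for _, dst in plan]
print(splits.count('valid'), splits.count('train'))  # 2 8
```

Once the plan looks right, each pair can be passed to `os.rename(src, dst)`, as the original loop does.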

1. Loading the data into PyTorch tensors

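```python
# Resize to 224x224, convert to a tensor, then normalize with the ImageNet mean/std
simple_transform = transforms.Compose([transforms.Resize((224, 224)),
                                       transforms.ToTensor(),
                                       transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225])])

# ImageFolder treats each subfolder (cat/, dog/) as one class
train = ImageFolder('./Data/dogsandcats/train/', simple_transform)
valid = ImageFolder('./Data/dogsandcats/valid/', simple_transform)
```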
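`ImageFolder` derives the labels from the subfolder names. torchvision sorts the class folder names, so `cat` should map to 0 and `dog` to 1. On the real dataset you can confirm this with `train.class_to_idx`. The sketch below reproduces that mapping with the standard library on a temporary directory. `find_classes_sketch` is a hypothetical name, not the torchvision function.

```python
import os
import tempfile


def find_classes_sketch(root):
    """Map each subfolder name to an integer label, in sorted order."""
    classes = sorted(entry.name for entry in os.scandir(root) if entry.is_dir())
    return classes, {name: idx for idx, name in enumerate(classes)}


with tempfile.TemporaryDirectory() as root:
    for name in ('dog', 'cat'):  # creation order does not matter
        os.makedirs(os.path.join(root, name))
    classes, class_to_idx = find_classes_sketch(root)

print(classes, class_to_idx)  # ['cat', 'dog'] {'cat': 0, 'dog': 1}
```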

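```python
def imshow(inp):
    """Imshow for Tensor."""
    # CHW tensor -> HWC NumPy array, the layout matplotlib expects
    inp = inp.numpy().transpose((1, 2, 0))
    mean = np.array([0.485, 0.456, 0.406])
    std = np.array([0.229, 0.224, 0.225])
    # Undo Normalize: x = std * z + mean
    inp = std * inp + mean
    inp = np.clip(inp, 0, 1)
    plt.imshow(inp)
```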
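`transforms.Normalize` computes z = (x - mean) / std for each channel. `imshow` reverses this with x = std * z + mean, after moving the channel axis last so the 3-element `mean`/`std` arrays broadcast over it. Here is a NumPy-only sketch of that round trip, with a random array standing in for a real image tensor:

```python
import numpy as np

mean = np.array([0.485, 0.456, 0.406])
std = np.array([0.229, 0.224, 0.225])

rng = np.random.default_rng(0)
x = rng.random((3, 4, 4))  # a fake CHW image with values in [0, 1)

# What Normalize does, channel by channel: z = (x - mean) / std
z = (x - mean[:, None, None]) / std[:, None, None]

# What imshow does: channels last, then x = std * z + mean (broadcast over the last axis)
restored = std * z.transpose((1, 2, 0)) + mean

print(np.allclose(restored, x.transpose((1, 2, 0))))  # True
```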

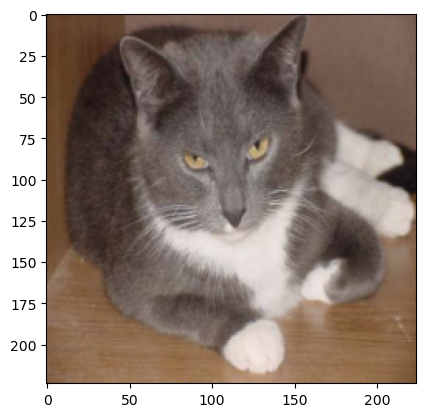

利用 imshow 函数可视化图片

imshow(train[50][0])

2. Loading PyTorch tensors in batches

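```python
# Shuffle the training data every epoch; the order of the validation data does not matter
train_data_gen = torch.utils.data.DataLoader(train, batch_size=64, shuffle=True, num_workers=3)
valid_data_gen = torch.utils.data.DataLoader(valid, batch_size=2, num_workers=3)

dataloaders = {}
dataloaders['train'] = train_data_gen
dataloaders['valid'] = valid_data_gen

# Number of images (not batches) in each split; len(DataLoader) would give the batch count
dataset_sizes = {}
dataset_sizes['train'] = len(train)
dataset_sizes['valid'] = len(valid)
```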
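With `drop_last=False` (the DataLoader default), `len(DataLoader)` is the number of batches, ceil(N / batch_size), not the number of images. Here is a quick standard-library check, assuming the 23,000 train / 2,000 valid split produced in step 0. `num_batches` is an illustrative helper:

```python
import math


def num_batches(n_samples, batch_size, drop_last=False):
    """Number of batches a DataLoader yields for a given dataset size."""
    if drop_last:
        return n_samples // batch_size
    return math.ceil(n_samples / batch_size)  # the last batch may be smaller


print(num_batches(23000, 64))  # 360 (359 full batches + 1 batch of 24)
print(num_batches(2000, 2))    # 1000
```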

3. Building the network architecture

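```python
# Load an ImageNet-pretrained ResNet-18
# (torchvision >= 0.13; older versions use models.resnet18(pretrained=True))
model_ft = models.resnet18(weights=models.ResNet18_Weights.IMAGENET1K_V1)

# Replace the final fully connected layer with a 2-class (cat/dog) head
num_ftrs = model_ft.fc.in_features
model_ft.fc = nn.Linear(num_ftrs, 2)

is_cuda = torch.cuda.is_available()
if is_cuda:
    model_ft = model_ft.cuda()

print(model_ft)
```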

```
ResNet(
  (conv1): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3), bias=False)
  (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
  (relu): ReLU(inplace=True)
  (maxpool): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
  (layer1): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
    (1): BasicBlock(
      (conv1): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(64, 64, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer2): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(64, 128, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer3): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (layer4): Sequential(
    (0): BasicBlock(
      (conv1): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (downsample): Sequential(
        (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2), bias=False)
        (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      )
    )
    (1): BasicBlock(
      (conv1): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
      (relu): ReLU(inplace=True)
      (conv2): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), bias=False)
      (bn2): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
    )
  )
  (avgpool): AdaptiveAvgPool2d(output_size=(1, 1))
  (fc): Linear(in_features=512, out_features=2, bias=True)
)
```
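The printout gives every kernel size, stride and padding. From these, the feature-map size for a 224×224 input can be worked out by hand with the usual formula floor((n + 2p - k) / s) + 1. The short sketch below traces it through the network and counts the parameters of the replaced `fc` layer. `conv_out` is an illustrative helper, not a PyTorch API.

```python
def conv_out(size, kernel, stride, padding):
    """Output height/width of a Conv2d or MaxPool2d layer (dilation 1, floor mode)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 224
size = conv_out(size, 7, 2, 3)   # conv1   -> 112
size = conv_out(size, 3, 2, 1)   # maxpool -> 56
trace = [size]                   # layer1 keeps 56 (3x3 convs, stride 1, padding 1)
for _ in range(3):               # layer2-4 each start with a stride-2 3x3 conv
    size = conv_out(size, 3, 2, 1)
    trace.append(size)
print(trace)  # [56, 28, 14, 7]

# avgpool reduces 512 x 7 x 7 to 512 values, which feed the new fc layer
fc_params = 512 * 2 + 2          # weights + biases of nn.Linear(512, 2)
print(fc_params)  # 1026
```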

4. Training the model

```python
# Loss function and optimizer
learning_rate = 0.001
criterion = nn.CrossEntropyLoss()
optimizer_ft = optim.SGD(model_ft.parameters(), lr=learning_rate, momentum=0.9)

# Multiply the learning rate by 0.1 every 7 epochs
exp_lr_scheduler = lr_scheduler.StepLR(optimizer_ft, step_size=7, gamma=0.1)
```
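`nn.CrossEntropyLoss` applies log-softmax to the two output scores and takes the negative log-probability of the true class. By default it averages this over the batch. `StepLR` with `step_size=7, gamma=0.1` multiplies the learning rate by 0.1 every 7 epochs. The plain-Python sketch below implements both formulas for a single sample. `cross_entropy` and `step_lr` are illustrative names, not PyTorch APIs.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample: -log(softmax(logits)[target])."""
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(v - m) for v in logits))
    return log_sum_exp - logits[target]


def step_lr(base_lr, epoch, step_size=7, gamma=0.1):
    """Learning rate StepLR gives in a given (0-based) epoch."""
    return base_lr * gamma ** (epoch // step_size)


print(round(cross_entropy([2.0, 0.0], 0), 4))  # 0.1269
print([step_lr(0.001, e) for e in (0, 6, 7, 14, 21)])
```

Over the default 25 epochs, this means epochs 0-6 train at 1e-3, 7-13 at 1e-4, 14-20 at 1e-5 and 21-24 at 1e-6.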

Training function

```python
import copy

def train_model(model, criterion, optimizer, scheduler, num_epochs=25):
    since = time.time()
    # Deep-copy the weights: state_dict() returns references that keep changing during training
    best_model_wts = copy.deepcopy(model.state_dict())
    best_acc = 0.0

    for epoch in range(num_epochs):
        print('Epoch {}/{}'.format(epoch, num_epochs - 1))
        print('-' * 10)

        # Each epoch has a training phase and a validation phase
        for phase in ['train', 'valid']:
            if phase == 'train':
                model.train()  # training mode
            else:
                model.eval()   # evaluation mode (BatchNorm uses its running statistics)

            running_loss = 0.0
            running_corrects = 0

            # Iterate over the data (Variable wrappers are unnecessary since PyTorch 0.4)
            for inputs, labels in dataloaders[phase]:
                if is_cuda:
                    inputs, labels = inputs.cuda(), labels.cuda()

                # Zero the parameter gradients
                optimizer.zero_grad()

                # Forward pass; track gradients only in the training phase
                with torch.set_grad_enabled(phase == 'train'):
                    outputs = model(inputs)
                    preds = outputs.argmax(dim=1)
                    loss = criterion(outputs, labels)

                    # Backward pass and optimization only in the training phase
                    if phase == 'train':
                        loss.backward()
                        optimizer.step()

                # Statistics: loss is a batch mean, so weight it by the batch size
                running_loss += loss.item() * inputs.size(0)
                running_corrects += (preds == labels).sum().item()

            if phase == 'train':
                # Since PyTorch 1.1 the scheduler must step after optimizer.step(), once per epoch
                scheduler.step()

            n_samples = len(dataloaders[phase].dataset)  # number of images in this split
            epoch_loss = running_loss / n_samples
            epoch_acc = running_corrects / n_samples
            print('{} Loss: {:.4f} Acc: {:.4f}'.format(phase, epoch_loss, epoch_acc))

            # Keep a deep copy of the best model so far
            if phase == 'valid' and epoch_acc > best_acc:
                best_acc = epoch_acc
                best_model_wts = copy.deepcopy(model.state_dict())

        print()

    time_elapsed = time.time() - since
    print('Training complete in {:.0f}m {:.0f}s'.format(time_elapsed // 60, time_elapsed % 60))
    print('Best val Acc: {:.4f}'.format(best_acc))

    # Load the best weights
    model.load_state_dict(best_model_wts)
    return model
```
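`CrossEntropyLoss` returns the mean over a batch, and the last batch can be smaller than the rest. An epoch-level average should therefore weight each batch's loss by its size and then divide by the number of images. Simply averaging the batch means gives the wrong result. Below is a small sketch with made-up batch numbers. `epoch_stats` is a hypothetical helper.

```python
def epoch_stats(batches):
    """Per-sample loss and accuracy over an epoch.

    `batches` holds (mean_loss, n_correct, batch_size) for each batch.
    """
    total_loss = sum(mean_loss * size for mean_loss, _, size in batches)
    total_correct = sum(correct for _, correct, _ in batches)
    n_samples = sum(size for _, _, size in batches)
    return total_loss / n_samples, total_correct / n_samples


# Two full batches of 64 and a final short batch of 8 (made-up numbers)
batches = [(0.50, 48, 64), (0.30, 56, 64), (1.00, 4, 8)]
loss, acc = epoch_stats(batches)
naive = sum(m for m, _, _ in batches) / len(batches)  # over-weights the short batch

print(round(loss, 4), round(acc, 4))  # 0.4353 0.7941
print(round(naive, 4))  # 0.6
```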

5. Running the training

```python
model_ft = train_model(model_ft, criterion, optimizer_ft, exp_lr_scheduler)
```
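After training, the model still outputs two raw scores (logits) per image. Below is a minimal sketch of turning them into a label. It assumes `train.class_to_idx` is `{'cat': 0, 'dog': 1}`, which is what `ImageFolder` produces here because it sorts the folder names. The logits are made-up numbers, not real model output.

```python
import math

# Hypothetical raw outputs (logits) of the 2-class model for one image
logits = [-1.2, 2.3]
class_to_idx = {'cat': 0, 'dog': 1}  # assumed ImageFolder mapping for cat/ and dog/
idx_to_class = {idx: name for name, idx in class_to_idx.items()}

# Softmax turns the two scores into probabilities; argmax picks the label
m = max(logits)
exps = [math.exp(v - m) for v in logits]
probs = [e / sum(exps) for e in exps]
pred = max(range(len(probs)), key=probs.__getitem__)

print(idx_to_class[pred], round(probs[pred], 3))  # dog 0.971
```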
