pytorch实战1

1、Tensor的数据类型# torch.FloatTensor浮点型a = torch.FloatTensor(2,3)print(a)b = torch.FloatTensor([2,3,4,5])print(b)# torch.IntTensor整型a = torch.IntTensor(2,3)print(a)b = torch.IntTensor([2,3,4,5...

·

1、Tensor的数据类型

# torch.FloatTensor浮点型

a = torch.FloatTensor(2,3)

print(a)

b = torch.FloatTensor([2,3,4,5])

print(b)

# torch.IntTensor整型

a = torch.IntTensor(2,3)

print(a)

b = torch.IntTensor([2,3,4,5])

print(b)

# torch.rand生成数据类型为浮点型且维度指定,随机生成的浮点数据在0~1区间均匀分布

a = torch.rand(2,3)

print(a)

# torch.randn生成数据类型为浮点型且维度指定,随机生成的浮点数的取值满足均值为0,方差为1的正太分布

a = torch.randn(2,3)

print(a)

# torch.range生成数据类型为浮点型且自定义起始范围和结束范围

a = torch.arange(2,8,1)

print(a)

# torch.zeros

a = torch.zeros(2,3)

print(a)2、Tensor的运算

a = torch.randn(2,3)

print(a)

# 绝对值

a = torch.abs(a)

print(a)

b = torch.randn(2,3)

print(b)

# 加和

c = torch.add(a,b)

print(c)

a = torch.randn(2,3)

print(a)

# clamp规约

b = torch.clamp(a,-0.1,0.1)

print(b)

a = torch.IntTensor([1,2,3,4])

b = torch.IntTensor([1,2,3,4])

# 除法

c = torch.div(a,b)

print(c)

a = torch.IntTensor([[1,2],[3,4]])

b = torch.IntTensor([[1,2],[3,4]])

# 乘积

c = torch.mul(a,b)

print(c)

a = torch.IntTensor([2,3,4])

# 求幂

c = torch.pow(a,2)

print(c)

a = torch.randn(2,3)

b = torch.randn(3,2)

# 矩阵乘积

c = torch.mm(a,b)

print(c)

a = torch.IntTensor([[1,2,3],[4,5,6]])

b = torch.IntTensor([1,1,1])

# 矩阵与向量的乘法

c = torch.mv(a,b)

print(c)

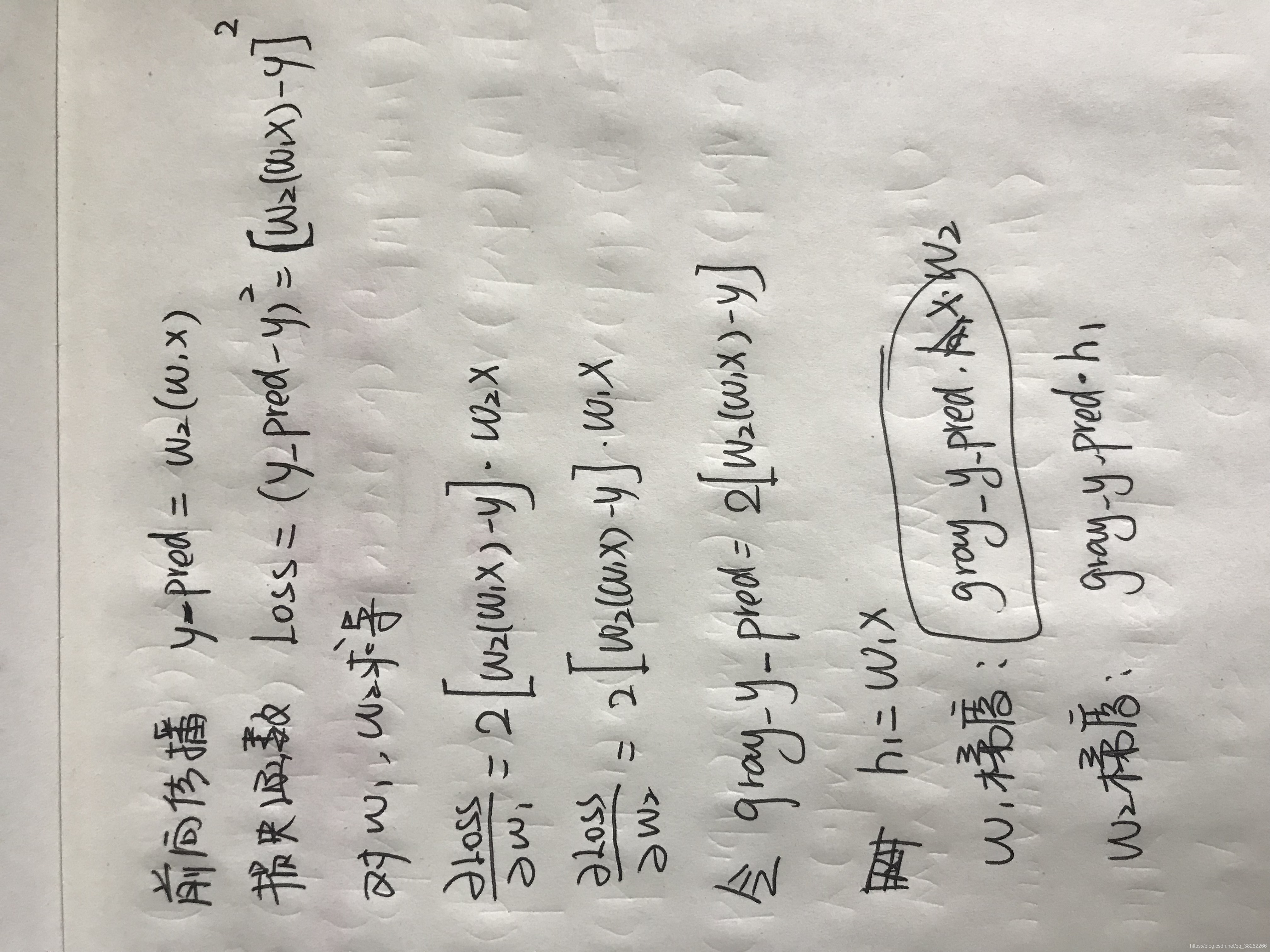

3、搭建一个简单的神经网络

# 一批次输入数据量

batch_n = 100

# 隐藏层结点数量

hidden_layers = 100

# 数据特征1000个

input_data = 1000

# 输出特征10个

output_data = 10

# 初始化权重 100*1000

x = torch.randn(batch_n,input_data)

# 100*10

y = torch.randn(batch_n,output_data)

# 1000*100

w1 = torch.randn(input_data,hidden_layers)

# 100*10

w2 = torch.randn(hidden_layers,output_data)

# 定义训练次数和学习率

epoch_n = 20

learning_rate = 1e-6

# 梯度下降优化神经网络的参数

for epoch in range(epoch_n):

# 正向传播

# 100*100

h1 = x.mm(w1)

h1 = h1.clamp(min=0)

# 100*10

y_pred = h1.mm(w2)

loss = (y_pred-y).pow(2).sum()

print('Epoch:{},Loss:{:.4f}'.format(epoch,loss))

gray_y_pred = 2*(y_pred-y)

# t()转置 mm()矩阵乘积 反向传播

# w2的梯度

gray_w2 = h1.t().mm(gray_y_pred)

grad_h = gray_y_pred.clone()

grad_h = grad_h.mm(w2.t())

grad_h.clamp(min=0)

# w1的梯度

gray_w1 = x.t().mm(grad_h)

w1 -= learning_rate * gray_w1

w2 -= learning_rate * gray_w2

4、利用自动求梯度机制搭建神经网络

import torch

from torch.autograd import Variable

# 批量输入的数据量

batch_n = 100

# 通过隐藏层后输出的特征数

hidden_layer = 100

# 输入数据的特征个数

input_data = 1000

# 最后输出的分类结果数

output_data = 10

# 输入

x = Variable(torch.randn(batch_n,input_data),requires_grad = False)

# 输出

y = Variable(torch.randn(batch_n,output_data),requires_grad = False)

# 参数

w1 = Variable(torch.randn(input_data,hidden_layer),requires_grad = True)

w2 = Variable(torch.randn(hidden_layer,output_data),requires_grad = True)

epoch_n = 20

learning_rate = 1e-6

for epoch in range(epoch_n):

y_pred = x.mm(w1).clamp(min=0).mm(w2)

loss = (y_pred-y).pow(2).sum()

print("Epoch:{} , Loss:{:.4f}".format(epoch, loss.data))

loss.backward()

w1.data -= learning_rate * w1.grad.data

w2.data -= learning_rate * w2.grad.data

# 如果不置零,则计算的梯度值会被一直累加,这样就会影响到后续的计算

w1.grad.data.zero_()

w2.grad.data.zero_()5、自定义传播函数

import torch

from torch.autograd import Variable

# 批量输入的数据量

batch_n = 100

# 通过隐藏层后输出的特征数

hidden_layer = 100

# 输入数据的特征个数

input_data = 1000

# 最后输出的分类结果数

output_data = 10

# 构建一个继承了torch.nn.Module的新类,来完成对前向传播函数和后向传播函数的重写

class Model(torch.nn.Module):

def __init__(self):

super(Model,self).__init__()

# 重写forward函数

def forward(self,input,w1,w2):

x = torch.mm(input,w1)

x = torch.clamp(x,min=0)

x = torch.mm(x,w2)

return x

# 重写backward函数

def backward(self):

pass

# 输入

x = Variable(torch.randn(batch_n,input_data),requires_grad = False)

# 输出

y = Variable(torch.randn(batch_n,output_data),requires_grad = False)

# 参数

w1 = Variable(torch.randn(input_data,hidden_layer),requires_grad = True)

w2 = Variable(torch.randn(hidden_layer,output_data),requires_grad = True)

epoch_n = 20

learning_rate = 1e-6

model = Model()

for epoch in range(epoch_n):

y_pred = model.forward(x,w1,w2)

loss = (y_pred-y).pow(2).sum()

print("Epoch:{} , Loss:{:.4f}".format(epoch, loss.data))

loss.backward()

w1.data -= learning_rate * w1.grad.data

w2.data -= learning_rate * w2.grad.data

# 如果不置零,则计算的梯度值会被一直累加,这样就会影响到后续的计算

w1.grad.data.zero_()

w2.grad.data.zero_()

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)