Visualization in PyTorch with tensorboardX (Part 2): Feature Map Visualization


Because we need to operate on individual layers of the network, the layer naming has changed somewhat.
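To see why the names matter, here is a minimal sketch showing that wrapping layers in an `OrderedDict` gives every submodule a readable, addressable name. The `TinyNet` class and its layer sizes are made up for illustration and are not the LeNet5 used in this post.

```python
from collections import OrderedDict

import torch.nn as nn


class TinyNet(nn.Module):
    """Hypothetical single-block network, used only to illustrate naming."""

    def __init__(self):
        super().__init__()
        self.c1 = nn.Sequential(OrderedDict([
            ('conv1', nn.Conv2d(1, 6, kernel_size=5, padding=2)),
            ('relu', nn.ReLU()),
        ]))


net = TinyNet()

# Top-level blocks, as iterated by named_children()
child_names = [name for name, _ in net.named_children()]

# Parameter names are prefixed with the block name and the OrderedDict key
param_names = [name for name, _ in net.named_parameters()]

print(child_names)
print(param_names)

# A named layer can also be fetched directly as an attribute
conv = net.c1.conv1
```

Names such as `c1` are what the feature-map code later matches against, and the prefixed parameter names become the histogram tags in TensorBoard.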

LeNet5.py

import torch.nn as nn
import numpy as np
import torch
from collections import OrderedDict


# Define LeNet5
class LeNet5(nn.Module):
    def __init__(self, num_classes=10):
        super(LeNet5, self).__init__()
        # Each conv block is a named Sequential so it can be addressed by name later
        self.c1 = nn.Sequential(OrderedDict([
            ('conv1', nn.Conv2d(1, 6, kernel_size=5, stride=1, padding=2)),
            ('batch', nn.BatchNorm2d(6)),
            ('relu', nn.ReLU()),
            ('maxpool', nn.MaxPool2d(kernel_size=2, stride=2))
        ]))
        self.c2 = nn.Sequential(OrderedDict([
            ('conv2', nn.Conv2d(6, 16, kernel_size=5)),
            ('batch', nn.BatchNorm2d(16)),
            ('relu', nn.ReLU()),
            ('maxpool', nn.MaxPool2d(kernel_size=2, stride=2))
        ]))
        self.c3 = nn.Sequential(OrderedDict([
            ('conv1', nn.Conv2d(16, 120, kernel_size=5)),
            ('batch', nn.BatchNorm2d(120)),
            ('relu', nn.ReLU())
        ]))
        self.f1 = nn.Sequential(
            nn.Linear(120, 84),
            nn.ReLU()
        )
        self.f2 = nn.Sequential(
            nn.Linear(84, num_classes),
            # dim=1 normalizes over the class dimension; omitting dim triggers a deprecation warning
            nn.LogSoftmax(dim=1)
        )

    def forward(self, x):
        out = self.c1(x)
        out = self.c2(out)
        out = self.c3(out)
        out = out.reshape(out.size(0), -1)  # flatten to (batch, 120)
        out = self.f1(out)
        out = self.f2(out)
        return out
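The first fully connected layer takes 120 inputs, which only works if the output of `c3` is 1x1 spatially. As a quick check, the sketch below walks a 28x28 FashionMNIST image through the kernel, stride, and padding values used above. The helper `out_size` is a name introduced here for illustration; it applies the standard output-size formula for convolution and pooling without dilation.

```python
def out_size(size, kernel, stride=1, padding=0):
    """Spatial size after a conv or pooling layer (floor division, no dilation)."""
    return (size + 2 * padding - kernel) // stride + 1


size = 28                                   # FashionMNIST images are 28x28
size = out_size(size, kernel=5, padding=2)  # c1 conv  -> 28
size = out_size(size, kernel=2, stride=2)   # c1 pool  -> 14
size = out_size(size, kernel=5)             # c2 conv  -> 10
size = out_size(size, kernel=2, stride=2)   # c2 pool  -> 5
size = out_size(size, kernel=5)             # c3 conv  -> 1

flattened = 120 * size * size               # 120 channels of 1x1
print(size, flattened)
```

With a different input resolution the flattened size would no longer be 120, and `nn.Linear(120, 84)` would have to change accordingly.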

def train():
    # Prepare the data
    import torchvision
    import torchvision.transforms as transforms
    import torch.optim as optim

    mnist_train = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST',
                                                    train=True, download=True,
                                                    transform=transforms.ToTensor())
    mnist_iter = torch.utils.data.DataLoader(mnist_train, 64, shuffle=True)

    # Train the whole network
    device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
    total_step = len(mnist_iter)  # number of batches per epoch
    curr_lr = 0.1
    model = LeNet5(10).to(device)  # the model must live on the same device as the data
    optimizer = optim.SGD(model.parameters(), lr=curr_lr)
    num_epoches = 1
    loss_ = torch.nn.CrossEntropyLoss()

    # --------------------- tensorboard ---------------#
    loss_show = []
    # --------------------- tensorboard ---------------#

    for epoch in range(num_epoches):
        for i, (images, labels) in enumerate(mnist_iter):
            images = images.to(device)
            labels = labels.to(device)

            # Forward pass
            outputs = model(images)
            loss = loss_(outputs, labels)

            # --------------------- tensorboard ---------------#
            loss_show.append(loss.item())  # store a float, not the graph-attached tensor
            # --------------------- tensorboard ---------------#

            # Backward pass
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if (i + 1) % 100 == 0:
                print(f'Epoch {epoch + 1}/{num_epoches}, Step {i + 1}/{total_step}, {loss.item()}')  # don't forget item()
            if i == 300:
                break

    # --------------------- tensorboard ---------------#
    import show as tb  # the logging helpers are in show.py, listed below
    tb.show(model, loss_show)
    # --------------------- tensorboard ---------------#

    torch.save(model.state_dict(), 'ResnetCifar10.pt')


if __name__ == '__main__':
    train()

show.py

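from tensorboardX import SummaryWriter
import torchvision.utils as vutils

# Define the SummaryWriter
writer = SummaryWriter('./Result')  # event files are written to this folder


def show(model, loss):
    # Log a histogram of each layer's parameters
    print(model)
    for name, param in model.named_parameters():
        # The BatchNorm layers are named 'batch' in LeNet5, so filter on that key
        if 'batch' not in name:
            writer.add_histogram(name, param.detach().cpu(), 0)
    # Log the loss curve, one scalar per training step
    for step, value in enumerate(loss):
        writer.add_scalar('loss', float(value), step)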

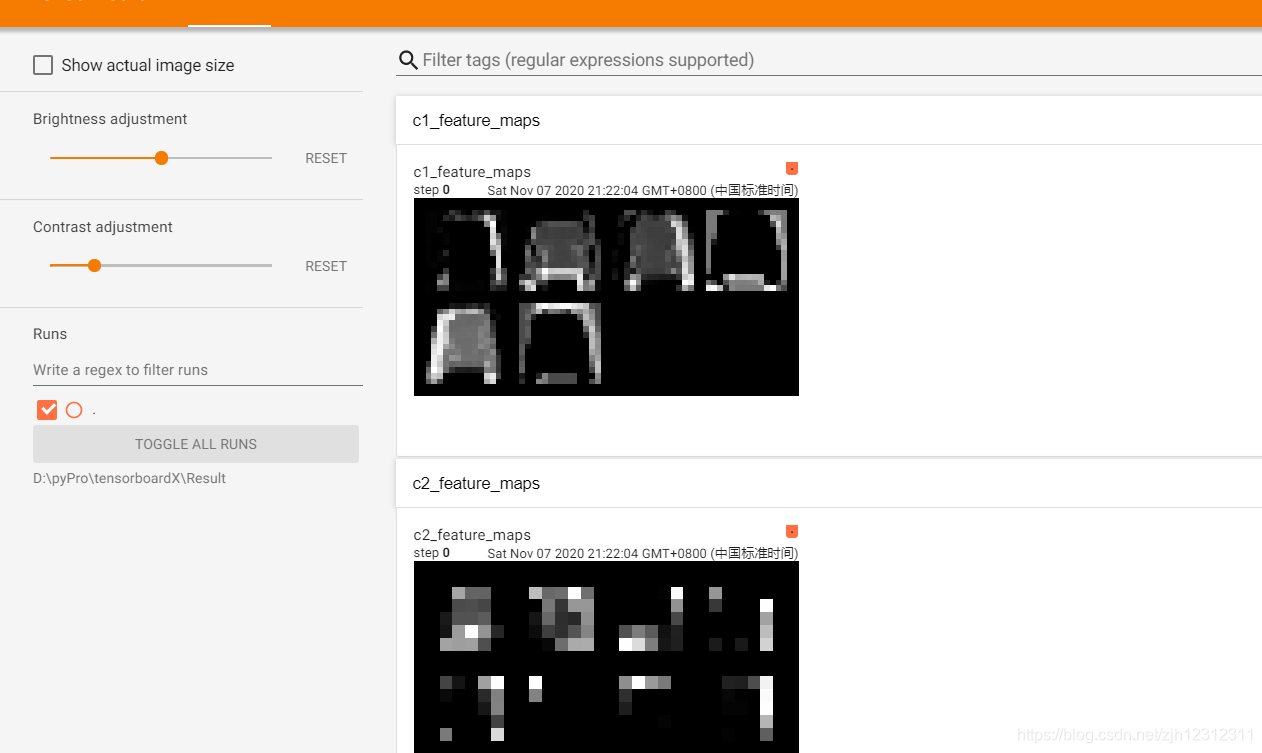

# Convolution feature map visualization
def show_featuremap(model, image):
    # Build a grid from the input batch
    img_grid = vutils.make_grid(image, normalize=True, scale_each=True, nrow=2)
    # Log the raw image
    writer.add_image('raw img', img_grid)
    model.eval()
    for name, layer in model.named_children():
        print(name, layer)
        # Only the conv blocks (c1, c2, c3) are visualized; stop at the first fully connected block
        if 'c' not in name:
            return
        image = layer(image)
        # B, C, H, W ---> C, B, H, W: with B = 1, each channel becomes its own grayscale tile
        x1 = image.detach().transpose(0, 1)
        img_grid = vutils.make_grid(x1, normalize=True, scale_each=True, nrow=4)  # normalize rescales each tile to [0, 1]
        writer.add_image(f'{name}_feature_maps', img_grid, global_step=0)

    # Earlier attempt, kept for reference:
    # for name, layer in model.named_parameters():
    #     print(name)
    #     if 'conv' in name:
    #         print(name)
    #         x1 = image.transpose(0, 1)
    #         img_grid = vutils.make_grid(x1, normalize=True, scale_each=True, nrow=4)
    #         writer.add_image(f'{name}_feature_maps', img_grid, global_step=0)

if __name__ == '__main__':
    import torch
    import LeNet5
    import torchvision
    import torchvision.transforms as transforms

    model = LeNet5.LeNet5(10)
    # map_location lets a checkpoint saved on GPU load on a CPU-only machine
    model.load_state_dict(torch.load('ResnetCifar10.pt', map_location='cpu'))

    mnist_train = torchvision.datasets.FashionMNIST(root='~/Datasets/FashionMNIST',
                                                    train=True, download=True,
                                                    transform=transforms.ToTensor())
    mnist_iter = torch.utils.data.DataLoader(mnist_train, 1, shuffle=True)

    # Visualize the feature maps for a single random image
    images, labels = next(iter(mnist_iter))
    show_featuremap(model, images)

Result:
