In-Depth Analysis of Intelligent Code Generation Systems: The AI Programming Paradigm Shift from Copilot to Devin

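AI code generation is shifting from an assistive tool to a programming partner. This article traces three generations of the technology: (1) Transformer-based code completion (e.g., Codex); (2) generation with structural understanding and long context (e.g., StarCoder2); and (3) multi-agent collaborative programming systems (e.g., Devin). It examines the architecture of agentic systems in depth, including hierarchical planning, tool calling, and secure sandboxing. It then discusses solutions to core challenges such as grammar-constrained decoding and execution-feedback learning. Finally, it covers enterprise-grade concerns such as multi-model routing, code RAG, and secure execution.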

1. Introduction: When AI Becomes a Programming Partner

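In March 2024, Cognition AI unveiled Devin. It resolved 13.86% of issues on the SWE-bench benchmark (evaluated on a random 25% subset), a record at the time. The result marked AI programming's move from "code completion" to "end-to-end software development." Meanwhile, GitHub Copilot has passed 1.3 million paid subscribers, and in some projects AI-generated code accounts for more than 40% of the total.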

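This quiet revolution is reshaping software engineering workflows. AI now reaches across the whole development lifecycle, from requirements analysis and architecture design to implementation, testing, and debugging. This article examines the technology stack behind intelligent code generation systems. It traces the architectural evolution from simple Transformer-based completion to multi-agent collaborative programming.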

2. Three Generations of Code Generation Paradigms

2.1 First Generation: Autoregressive (Left-to-Right) Code Completion

Representative models: Codex (2021), CodeParrot

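Core idea: treat code as ordinary text. A standard decoder-only Transformer generates it autoregressively, predicting each next token from the code that precedes it.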
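Each decoding step turns the model's scores for every vocabulary token into probabilities, picks one token, appends it, and repeats. The `temperature` argument (0.2 in the class below) divides the scores before the softmax. The minimal sketch here shows why a low value makes completions nearly deterministic. The three logits are made-up numbers for illustration, and the function name is hypothetical.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw next-token scores into probabilities; a low temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens, e.g. "return", "pass", "print"
logits = [2.0, 1.0, 0.5]
for t in (1.0, 0.2):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At temperature 1.0 the top token gets roughly 63% of the mass. At 0.2 it gets over 99%, so sampling almost always picks the same continuation.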

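```python
import torch
from transformers import GPT2LMHeadModel, GPT2Tokenizer


class CodeCompletionModel:
    def __init__(self, model_path="microsoft/CodeGPT-small-py"):
        self.tokenizer = GPT2Tokenizer.from_pretrained(model_path)
        # When the prefix is too long, drop its beginning and keep the code nearest the cursor
        self.tokenizer.truncation_side = "left"
        self.model = GPT2LMHeadModel.from_pretrained(model_path)
        self.model.eval()
        # GPT-2-sized models have a fixed position window (1024 tokens for GPT-2 small)
        self.max_context = self.model.config.n_positions

    def complete(self, prefix_code, temperature=0.2, max_new_tokens=128):
        """
        Generate a continuation of the given code.
        prefix_code: the code written so far
        """
        # Encode the input, leaving room in the context window for the new tokens
        inputs = self.tokenizer(
            prefix_code,
            return_tensors="pt",
            truncation=True,
            max_length=self.max_context - max_new_tokens,
        )

        # Sampling parameters (a low temperature keeps code generation close to deterministic)
        with torch.no_grad():
            outputs = self.model.generate(
                inputs.input_ids,
                attention_mask=inputs.attention_mask,
                max_new_tokens=max_new_tokens,
                do_sample=True,          # temperature/top_p only take effect when sampling
                temperature=temperature,
                top_p=0.95,
                repetition_penalty=1.2,  # discourage repeated code
                pad_token_id=self.tokenizer.eos_token_id,
                eos_token_id=self.tokenizer.encode("\n\n")[0],  # stop at a blank line
            )

        # Decode only the newly generated tokens. Slicing the decoded string by
        # len(prefix_code) breaks when truncation or detokenization changes the text.
        new_tokens = outputs[0][inputs.input_ids.shape[1]:]
        return self.tokenizer.decode(new_tokens, skip_special_tokens=True)


# Usage example
model = CodeCompletionModel()
prefix = """
def quicksort(arr):
    if len(arr) <= 1:
        return arr
    pivot = arr[len(arr) // 2]
"""
completion = model.complete(prefix)
print(completion)
```

Limitations: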

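- Local completion only; no understanding of cross-file context
- No syntactic constraints, so it may generate code that does not compile
- Cannot execute the code or verify that it is correct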
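A common first-generation workaround for the missing syntax guarantee is to sample several completions and keep only those that parse. It is a post-hoc filter, weaker than the constrained decoding in Section 3.1. The minimal sketch below uses Python's standard `ast` module. It assumes the completion closes the syntactic unit it is in; a mid-block completion would need the rest of the file appended before parsing. The function name `first_parsable` is hypothetical.

```python
import ast
from typing import List, Optional


def first_parsable(prefix: str, candidates: List[str]) -> Optional[str]:
    """Return the first completion for which prefix + completion parses as Python."""
    for completion in candidates:
        try:
            ast.parse(prefix + completion)
        except SyntaxError:  # IndentationError is a subclass and is rejected too
            continue
        return completion
    return None


prefix = "def add(a, b):\n"
candidates = [
    "    return a +\n",     # incomplete expression -> SyntaxError
    "    return (a + b\n",  # unclosed parenthesis -> SyntaxError
    "    return a + b\n",   # valid
]
print(repr(first_parsable(prefix, candidates)))
```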

2.2 Second Generation: Structured Code Understanding and Generation

Representative models: CodeT5, UniXcoder, CodeLlama, StarCoder2

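Core innovations:

- Dual-modality encoding: learning both the textual form of code (token sequences) and its structural form (the abstract syntax tree, AST)
- Fill-in-the-middle (FIM) objective: inserting code in the middle of a file, not just appending after a prefix
- Long-context modeling: 16K to 100K-token contexts for repository-level understanding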
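On the training side, FIM is a data transformation. Cut a document at two points and reorder the pieces so that the middle comes last. Ordinary next-token training then teaches the model to write the middle while seeing both sides. The minimal sketch below uses the prefix-suffix-middle (PSM) ordering with the StarCoder-family sentinel strings that the `FIMCodeModel` class uses. The character-level random cuts and the function names are illustrative choices, not a specific training pipeline.

```python
import random
from typing import Tuple

FIM_PREFIX, FIM_SUFFIX, FIM_MIDDLE = "<fim_prefix>", "<fim_suffix>", "<fim_middle>"


def split_document(doc: str, rng: random.Random) -> Tuple[str, str, str]:
    """Pick two distinct cut points and split doc into (prefix, middle, suffix)."""
    i, j = sorted(rng.sample(range(len(doc) + 1), 2))
    return doc[:i], doc[i:j], doc[j:]


def to_psm(prefix: str, middle: str, suffix: str) -> str:
    """Prefix-Suffix-Middle ordering: both sides come before the span to be written."""
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


doc = "def square(x):\n    return x * x\n"
rng = random.Random(0)
prefix, middle, suffix = split_document(doc, rng)
sample = to_psm(prefix, middle, suffix)
print(sample)
```

At inference time the prompt ends right after `<fim_middle>`, and whatever the model generates next is the infilled middle.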

Fill-In-the-Middle (FIM) in detail:

```python
from pathlib import Path

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer


def cosine_similarity(a, b):
    """Cosine similarity between two 1-D embedding vectors."""
    return float(torch.nn.functional.cosine_similarity(
        torch.as_tensor(a, dtype=torch.float32), torch.as_tensor(b, dtype=torch.float32), dim=0))


class FIMCodeModel:
    """
    FIM training format: <PRE> prefix <SUF> suffix <MID> middle
    Standard autoregressive: prefix -> continuation (left to right only)
    FIM mode: prefix + suffix -> middle (context on both sides)
    """

    def __init__(self, model_name="bigcode/starcoder2-7b", code_embedder=None):
        self.tokenizer = AutoTokenizer.from_pretrained(model_name)
        self.model = AutoModelForCausalLM.from_pretrained(model_name)
        # Any object with .encode(text) -> vector, e.g. a code embedding model (not shown)
        self.code_embedder = code_embedder
        # FIM special tokens used by the StarCoder model family
        self.fim_prefix = "<fim_prefix>"
        self.fim_suffix = "<fim_suffix>"
        self.fim_middle = "<fim_middle>"

    def infill(self, prefix, suffix, max_tokens=256):
        # Build the FIM-formatted prompt
        prompt = f"{self.fim_prefix}{prefix}{self.fim_suffix}{suffix}{self.fim_middle}"
        inputs = self.tokenizer(prompt, return_tensors="pt")
        with torch.no_grad():
            outputs = self.model.generate(
                inputs.input_ids,
                attention_mask=inputs.attention_mask,
                max_new_tokens=max_tokens,
                temperature=0.2,
                do_sample=True,
                pad_token_id=self.tokenizer.eos_token_id,
            )
        # Extract the generated middle section
        generated = self.tokenizer.decode(
            outputs[0][inputs.input_ids.shape[1]:], skip_special_tokens=True)
        # Reassemble the complete code
        return prefix + generated + suffix

    def build_code_graph(self, repo_path):
        """
        Build a code knowledge graph to support cross-file understanding
        """
        import tree_sitter_python as tspython
        from tree_sitter import Language, Parser

        parser = Parser(Language(tspython.language()))  # py-tree-sitter >= 0.22 style
        code_graph = {"files": {}, "imports": {}, "call_graph": {}, "type_defs": {}}

        for py_file in Path(repo_path).rglob("*.py"):
            code = py_file.read_text(encoding="utf-8")
            tree = parser.parse(code.encode("utf-8"))
            root_node = tree.root_node
            # Extract function definitions (_extract_functions is a helper not shown)
            code_graph["files"][str(py_file)] = self._extract_functions(root_node, code)
            # Extract import relationships (_extract_imports is a helper not shown)
            code_graph["imports"][str(py_file)] = self._extract_imports(root_node, code)
        return code_graph

    def retrieve_context(self, query, code_graph, top_k=5):
        """
        Retrieve relevant context from the code graph
        """
        # Semantic similarity computed with the code embedding model
        query_embed = self.code_embedder.encode(query)
        candidates = []
        for file_path, functions in code_graph["files"].items():
            for func in functions:
                # In practice, precompute and cache these embeddings instead of re-encoding per query
                func_embed = self.code_embedder.encode(func["body"])
                candidates.append({
                    "file": file_path,
                    "function": func["name"],
                    "body": func["body"],
                    "score": cosine_similarity(query_embed, func_embed),
                })
        # Return the top-k most relevant code snippets
        return sorted(candidates, key=lambda x: x["score"], reverse=True)[:top_k]
```

Long-context handling strategies:
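The core of the sliding-window strategy is plain index arithmetic. With chunk size C and overlap O, windows start every C - O tokens. The minimal sketch below uses the C = 8192, O = 1024 values from the class that follows; the function name `window_spans` is hypothetical. Unlike a bare `range(0, n, C - O)` loop, it stops once the last token is covered, so no window lies entirely inside its predecessor.

```python
from typing import List, Tuple


def window_spans(n_tokens: int, chunk_size: int, overlap: int) -> List[Tuple[int, int]]:
    """Return [start, end) spans of overlapping windows that cover n_tokens tokens."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("need 0 <= overlap < chunk_size")
    if n_tokens == 0:
        return []
    stride = chunk_size - overlap
    spans = []
    start = 0
    while True:
        end = min(start + chunk_size, n_tokens)
        spans.append((start, end))
        if end == n_tokens:
            break  # the end is covered; a further window would be redundant
        start += stride
    return spans


print(window_spans(20000, 8192, 1024))
```

For a 20,000-token file this yields three windows starting at 0, 7168 and 14336. For a file of exactly 8192 tokens it yields a single window.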

```python
from collections import defaultdict


class LongContextCodeModel:
    """
    Techniques for handling 100K+ token codebases.
    The tokenizer, the model, and the helpers _token_to_line, _attention_pooling
    and _sparse_cross_attention are assumed to be provided elsewhere.
    """

    def __init__(self, tokenizer, model):
        self.tokenizer = tokenizer
        self.model = model
        self.chunk_size = 8192
        self.overlap = 1024

    def sliding_window_encode(self, code_files):
        """
        Sliding-window encoding: split long code into overlapping chunks
        """
        chunks = []
        stride = self.chunk_size - self.overlap
        for file_path, content in code_files.items():
            tokens = self.tokenizer.encode(content)
            for i in range(0, len(tokens), stride):
                chunks.append({
                    "file": file_path,
                    "start_line": self._token_to_line(content, i),
                    "tokens": tokens[i:i + self.chunk_size],
                    "is_overlap": i > 0,  # later chunks share `overlap` tokens with the previous one
                })
                if i + self.chunk_size >= len(tokens):
                    break  # the end of the file is covered; skip a redundant tail chunk
        return chunks

    def hierarchical_attention(self, chunks):
        """
        Hierarchical aggregation: build file-level representations first, then let files interact
        """
        # Level 1: chunk-level encoding
        chunk_embeddings = [self.model.encode(chunk["tokens"]) for chunk in chunks]

        # Level 2: file-level aggregation (attention pooling over each file's chunks)
        per_file = defaultdict(list)
        for chunk, emb in zip(chunks, chunk_embeddings):
            per_file[chunk["file"]].append(emb)
        file_embeddings = {path: self._attention_pooling(embs) for path, embs in per_file.items()}

        # Level 3: cross-file interaction (sparse attention)
        return self._sparse_cross_attention(file_embeddings)
```

2.3 Third Generation: Agentic Coding Systems

Representative systems: Devin, OpenHands (formerly OpenDevin), GitHub Copilot Workspace

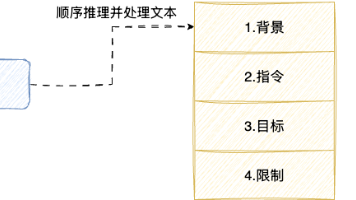

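Core idea: treat programming as a decision-making process. The system runs a plan-act-observe-adjust loop, combining tool calls with interaction with its environment, to carry out software development end to end.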
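Stripped of the LLM, the act-observe-adjust loop fits in a few lines. The toy sketch below is not Devin's implementation. It uses a scripted `policy` function as a stand-in for the model's decisions, an in-memory dictionary as the "file system", and hypothetical tool names (`write_file`, `run_tests`). It shows how a failed observation is fed back and changes the next action.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Observation:
    success: bool
    output: str


@dataclass
class ToyAgent:
    """Act -> observe -> adjust loop; the policy stands in for the LLM's decisions."""
    tools: Dict[str, Callable[[str], Observation]]
    policy: Callable[[List[str]], Tuple[str, str]]  # history -> (tool name, argument)
    history: List[str] = field(default_factory=list)

    def run(self, max_steps: int = 10) -> List[str]:
        for _ in range(max_steps):
            tool_name, arg = self.policy(self.history)
            if tool_name == "finish":
                break
            tool = self.tools.get(tool_name)
            obs = tool(arg) if tool else Observation(False, f"unknown tool {tool_name}")
            # Every observation, including failures, feeds into the next decision
            status = "ok" if obs.success else "fail"
            self.history.append(f"{tool_name}({arg}) -> {status}: {obs.output}")
        return self.history


# Hypothetical environment: an in-memory "file system" and a one-case test runner
files: Dict[str, str] = {}


def write_file(arg: str) -> Observation:
    name, content = arg.split("=", 1)
    files[name] = content
    return Observation(True, f"wrote {name}")


def run_tests(arg: str) -> Observation:
    ok = files.get("add.py") == "a + b"
    return Observation(ok, "1 passed" if ok else "add(2, 3) != 5")


def scripted_policy(history: List[str]) -> Tuple[str, str]:
    """Stand-in for the LLM: write code, test it, fix it after a failure, then finish."""
    if not history:
        return "write_file", "add.py=a - b"  # the first attempt contains a bug
    last = history[-1]
    if last.startswith("write_file"):
        return "run_tests", ""
    if "-> fail" in last:
        return "write_file", "add.py=a + b"  # adjust based on the failed observation
    return "finish", ""


agent = ToyAgent(tools={"write_file": write_file, "run_tests": run_tests}, policy=scripted_policy)
for entry in agent.run():
    print(entry)
```

A real agent replaces `scripted_policy` with an LLM call that sees the same history, and replaces the dictionary with a sandboxed workspace.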

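Architecture of a Devin-style agent (Devin's own implementation is not public; the following code is an illustrative sketch of the common design):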

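```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

# DockerSandbox, WorkingMemory and the *Tool classes are placeholders for components not shown here


@dataclass
class CodeAction:
    action_type: str  # name of a tool in ProgrammingAgent.tools, or "finish"
    parameters: Dict[str, Any]
    reasoning: str


@dataclass
class ExecutionPlan:
    steps: List[Any] = field(default_factory=list)


class ProgrammingAgent:
    """
    Core architecture of an agentic programming system
    """

    def __init__(self, llm_client, sandbox_config, max_iterations=50):
        self.llm = llm_client
        self.sandbox = DockerSandbox(sandbox_config)  # isolated execution environment
        self.tools = self._init_tools()
        self.memory = WorkingMemory()
        self.planner = HierarchicalPlanner(llm_client)
        self.max_iterations = max_iterations

    def _init_tools(self):
        return {
            "file_editor": FileEditorTool(),
            "terminal": TerminalTool(self.sandbox),
            "browser": BrowserTool(),  # for reading documentation
            "code_search": CodeSearchTool(),
            "debugger": DebuggerTool(self.sandbox),
            "git": GitTool(),
        }

    async def solve_task(self, task_description: str, repo_url: Optional[str] = None):
        """
        End-to-end task-solving loop
        """
        # 1. Environment setup
        if repo_url:
            await self.sandbox.clone_repository(repo_url)
        repo_structure = await self._analyze_repository()

        # 2. Task planning (high level)
        plan = await self.planner.create_plan(task=task_description, context=repo_structure)

        # 3. Execution loop. A work queue lets re-planning replace the remaining steps;
        # reassigning `plan` inside a `for step in plan.steps` loop would have no effect.
        pending = list(plan.steps)
        iterations = 0
        while pending and iterations < self.max_iterations:
            iterations += 1
            step = pending.pop(0)
            # Retrieve relevant context
            context = await self._retrieve_context(step)
            # Decide on an action
            action = await self._decide_action(step, context)
            if action.action_type == "finish":
                break  # the model signals that the task is complete
            # Execute and observe
            observation = await self._execute_action(action)
            # Update memory
            self.memory.add_experience(step, action, observation)
            # Re-plan dynamically if execution failed
            if not observation["success"]:
                new_plan = await self.planner.replan(step, observation)
                pending = list(new_plan.steps)

        # 4. Verify and submit
        test_results = await self._run_tests()
        if test_results.success:
            await self._submit_solution()
        return self.memory.get_solution()

    async def _decide_action(self, step, context) -> CodeAction:
        """
        Decide on the next action following the ReAct pattern
        """
        tool_names = list(self.tools.keys()) + ["finish"]
        prompt = self._build_react_prompt(
            task=step.description,
            context=context,
            history=self.memory.get_recent_history(5),
            available_tools=tool_names,
        )
        # Constrain the output format with structured generation
        # (assumes an LLM client whose generate() accepts a JSON Schema and returns the parsed dict)
        response = await self.llm.generate(
            prompt,
            response_format={
                "type": "json_schema",
                "schema": {
                    "type": "object",
                    "properties": {
                        "reasoning": {"type": "string"},
                        "action": {"type": "string", "enum": tool_names},
                        "parameters": {"type": "object"},
                    },
                    "required": ["reasoning", "action", "parameters"],
                },
            },
        )
        return CodeAction(
            action_type=response["action"],
            parameters=response["parameters"],
            reasoning=response["reasoning"],
        )

    async def _execute_action(self, action: CodeAction) -> Dict[str, Any]:
        """
        Run the chosen tool, isolated in the sandbox
        """
        tool = self.tools.get(action.action_type)
        if not tool:
            return {"success": False, "error": f"Unknown tool: {action.action_type}"}
        try:
            result = await tool.run(**action.parameters)
            # For command execution, capture the exit code and output
            if action.action_type == "terminal":
                result.update({
                    "exit_code": result.get("returncode"),
                    "stdout": (result.get("stdout") or "")[:1000],  # truncate long output
                    "stderr": result.get("stderr") or "",
                })
            return {"success": True, "observation": result}
        except Exception as e:
            return {"success": False, "error": str(e)}


class HierarchicalPlanner:
    """
    Hierarchical task planner: decompose a complex task into manageable sub-tasks
    """

    def __init__(self, llm):
        self.llm = llm

    async def create_plan(self, task, context):
        # High-level planning: identify the main phases
        high_level_plan = await self._high_level_planning(task, context)
        # Refine each phase into concrete steps
        detailed_steps = []
        for phase in high_level_plan.phases:
            steps = await self._elaborate_phase(phase, context)
            detailed_steps.extend(steps)
        return ExecutionPlan(steps=detailed_steps)

    async def _high_level_planning(self, task, context):
        """
        Example output:
        1. Understand the requirements and the existing code
        2. Design the solution
        3. Implement the code changes
        4. Add test cases
        5. Verify and debug
        """
        prompt = f"""
Task: {task}
Repository structure: {context.structure_summary}
Draft a high-level execution plan made of major phases. For each phase, state:
- the phase goal
- input dependencies
- output deliverables
- acceptance criteria
"""
        response = await self.llm.generate(prompt)
        return self._parse_plan(response)
```

3. Core Technical Challenges of Code Generation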

3.1 Guaranteeing Syntactic Correctness

Grammar-constrained decoding:
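The masking step is easiest to see on a toy grammar before the full decoder class. The minimal sketch below uses the grammar `S -> "(" S ")" | "x"`, a four-token vocabulary, and made-up model scores. Unconstrained, that "model" would start with `)`, which is already invalid. Masking every illegal token to negative infinity forces greedy decoding to produce a well-formed string. The grammar, the scores, and the function names are all illustrative assumptions.

```python
import math
from typing import Callable, Dict, List, Set

VOCAB = ["(", ")", "x", "<eos>"]


def allowed_tokens(prefix: List[str]) -> Set[str]:
    """Legal next tokens for the toy grammar S -> "(" S ")" | "x"."""
    if "x" not in prefix:
        return {"(", "x"}
    opens, closes = prefix.count("("), prefix.count(")")
    return {")"} if closes < opens else {"<eos>"}


def constrained_greedy(score_fn: Callable[[List[str]], Dict[str, float]], max_len: int = 20) -> List[str]:
    out: List[str] = []
    for _ in range(max_len):
        scores = score_fn(out)
        legal = allowed_tokens(out)
        # Illegal tokens get -inf, so the argmax can never select them
        masked = {t: (s if t in legal else -math.inf) for t, s in scores.items()}
        tok = max(masked, key=masked.get)
        if tok == "<eos>":
            break
        out.append(tok)
    return out


def overeager_model(prefix: List[str]) -> Dict[str, float]:
    """Made-up scores that favour closing early; 'x' becomes likely after two tokens."""
    scores = {")": 3.0, "<eos>": 2.5, "(": 2.0, "x": 1.0}
    if len(prefix) >= 2:
        scores["x"] = 4.0
    return scores


print("".join(constrained_greedy(overeager_model)))
```

Real tokenizers complicate this picture because BPE tokens rarely align with grammar terminals. Practical implementations therefore check, for each vocabulary token, whether its text can extend the current partial parse.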

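```python
from typing import Set

import torch


class GrammarConstrainedDecoder:
    """
    Constrain generation with a context-free grammar so the output stays syntactically valid.
    LR1Parser, ParseError and _load_grammar stand for an incremental parser not shown here.
    """

    def __init__(self, grammar_file, model, tokenizer):
        self.grammar = self._load_grammar(grammar_file)  # EBNF format
        self.parser = LR1Parser(self.grammar)
        self.model = model
        self.tokenizer = tokenizer
        self.valid_next_tokens_cache = {}

    def get_valid_next_tokens(self, partial_code: str) -> Set[int]:
        """
        Compute the set of token IDs the grammar allows next, given the partial code
        """
        # Parse the current state
        try:
            state_stack = self.parser.parse_partial(partial_code)
        except ParseError:
            # The partial code is already invalid: fall back to no constraint
            return set(self.tokenizer.get_vocab().values())

        # Terminals the parser can shift from the state on top of the stack
        # (only the top state matters, not every state on the stack).
        # Simplification: each terminal is mapped to its first token.
        valid_tokens = set()
        for terminal in self.parser.expected_terminals(state_stack):
            token_ids = self.tokenizer.encode(terminal, add_special_tokens=False)
            if token_ids:
                valid_tokens.add(token_ids[0])
        if self.parser.accepts(state_stack):
            valid_tokens.add(self.tokenizer.eos_token_id)  # a complete program may end here
        return valid_tokens

    def constrained_generate(self, prefix, max_length=100):
        """
        Constrained generation loop
        """
        input_ids = self.tokenizer(prefix, return_tensors="pt").input_ids
        prompt_len = input_ids.shape[1]
        generated = prefix
        for _ in range(max_length):
            # Token IDs the grammar allows next
            valid_tokens = self.get_valid_next_tokens(generated)
            if not valid_tokens:
                break  # dead end: nothing the grammar allows (softmax over all -inf would be NaN)

            # Forward pass
            with torch.no_grad():
                logits = self.model(input_ids).logits[0, -1, :]

            # Mask invalid tokens by setting them to -inf
            mask = torch.full_like(logits, float("-inf"))
            mask[list(valid_tokens)] = 0
            probs = torch.softmax(logits + mask, dim=-1)

            # Sample
            next_token = torch.multinomial(probs, num_samples=1)

            # Check the stopping condition
            if next_token.item() == self.tokenizer.eos_token_id:
                break

            # Append the token ID directly instead of re-tokenizing the whole string
            input_ids = torch.cat([input_ids, next_token.unsqueeze(0)], dim=1)
            generated = prefix + self.tokenizer.decode(input_ids[0, prompt_len:])
        return generated
```

3.2 Verifying Semantic Correctness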

Execution-based learning:
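The generate-verify-repair loop can be exercised end to end with a scripted stand-in for the model. In the minimal sketch below, `generate` is any function from feedback text to source code. Here it replays two fixed drafts, the first of which forgets negative inputs. If no attempt passes everything, the loop returns the one that passed the most tests. The function names are hypothetical, and `exec()` is acceptable only because the drafts are fixed strings; real model output belongs in a sandbox such as the one in Section 4.3.

```python
from typing import Callable, Dict, List, Tuple

Case = Tuple[tuple, object]  # (positional args, expected return value)


def count_passed(source: str, func_name: str, tests: List[Case]) -> int:
    """Execute a candidate and count how many (args, expected) cases it passes."""
    namespace: Dict[str, object] = {}
    try:
        exec(source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0  # does not compile or does not define the function
    passed = 0
    for args, expected in tests:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failed case
    return passed


def generate_verify_repair(generate: Callable[[str], str], func_name: str,
                           tests: List[Case], max_attempts: int = 5) -> str:
    """Regenerate with feedback until all tests pass; otherwise return the best attempt."""
    feedback = ""
    best_src, best_score = "", -1
    for _ in range(max_attempts):
        src = generate(feedback)
        score = count_passed(src, func_name, tests)
        if score > best_score:
            best_src, best_score = src, score
        if score == len(tests):
            return src
        feedback = f"previous attempt passed {score}/{len(tests)} tests"
    return best_src


# Scripted stand-in for the model: the first draft forgets negative inputs
WRONG = "def absval(x):\n    return x\n"
RIGHT = "def absval(x):\n    return -x if x < 0 else x\n"
drafts = iter([WRONG, RIGHT])
TESTS = [((3,), 3), ((-2,), 2), ((0,), 0)]

result = generate_verify_repair(lambda feedback: next(drafts), "absval", TESTS)
print(result)
```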

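```python
class ExecutionFeedbackTrainer:
    """
    Improve generation by executing the code and observing the results.
    The recorded feedback can also be reused later as a training signal.
    _run_tests and _analyze_failures are helpers not shown here.
    """

    def __init__(self, model, execution_env):
        self.model = model
        self.env = execution_env
        self.feedback_buffer = []

    async def generate_with_verification(self, problem_description, test_cases, max_attempts=5):
        """
        Generate-verify-repair loop
        """
        self.feedback_buffer = []  # feedback from a previous problem must not leak into this one
        for attempt in range(max_attempts):
            # Generate a candidate
            candidate = await self.model.generate(
                prompt=problem_description,
                context=self._build_feedback_context(),
            )
            # Verify by execution
            test_results = await self._run_tests(candidate, test_cases)
            if all(r["passed"] for r in test_results):
                return candidate
            # Analyze why it failed
            failure_analysis = self._analyze_failures(test_results)
            # Record the feedback
            self.feedback_buffer.append({
                "attempt": attempt,
                "code": candidate,
                "num_passed": sum(1 for r in test_results if r["passed"]),
                "failures": failure_analysis,
                "stdout": [r["stdout"] for r in test_results if not r["passed"]],
            })
        # Return the best attempt (the one that passed the most tests)
        return self._select_best_attempt()

    def _select_best_attempt(self):
        best = max(self.feedback_buffer, key=lambda fb: fb["num_passed"], default=None)
        return best["code"] if best else None

    def _build_feedback_context(self):
        """
        Build a prompt section describing earlier failed attempts
        """
        if not self.feedback_buffer:
            return ""
        context = "Previous attempts and failure analysis:\n"
        for fb in self.feedback_buffer[-3:]:  # the 3 most recent attempts
            error_output = "\n".join(fb["stdout"])[:200]  # stdout is a list; join before truncating
            context += f"""
Attempt {fb['attempt']}:
Code: {fb['code'][:500]}...
Failure reason: {fb['failures']}
Error output: {error_output}
---
"""
        return context
```

3.3 Long-Range Dependencies and Cross-File Understanding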

Building a code knowledge graph:
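For Python sources, the heart of such a graph can be sketched with only the standard-library `ast` module: call edges, plus resolution of names imported with `from X import name`. The minimal sketch below is not the tree-sitter pipeline used in the class that follows. It tracks only direct calls by name and skips builtins. Calls inside nested functions are also credited to the enclosing function, which is a deliberate simplification. The function name `build_call_graph` is hypothetical.

```python
import ast
import builtins
from typing import Dict, Set


def build_call_graph(sources: Dict[str, str]) -> Dict[str, Set[str]]:
    """Map 'module.function' to the qualified names of the functions it calls by name."""
    graph: Dict[str, Set[str]] = {}
    for module, code in sources.items():
        tree = ast.parse(code)
        # Resolve names brought in with "from X import name [as alias]"
        imported = {
            alias.asname or alias.name: f"{imp.module}.{alias.name}"
            for imp in ast.walk(tree)
            if isinstance(imp, ast.ImportFrom) and imp.module
            for alias in imp.names
        }
        for node in ast.walk(tree):
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
                callees = graph.setdefault(f"{module}.{node.name}", set())
                for inner in ast.walk(node):
                    if isinstance(inner, ast.Call) and isinstance(inner.func, ast.Name):
                        name = inner.func.id
                        if hasattr(builtins, name):
                            continue  # skip print, len, ...
                        callees.add(imported.get(name, f"{module}.{name}"))
    return graph


sources = {
    "utils": "def normalize(s):\n    return s.strip().lower()\n",
    "app": (
        "from utils import normalize\n"
        "\n"
        "def handle(text):\n"
        "    print(text)\n"
        "    return normalize(text)\n"
    ),
}
graph = build_call_graph(sources)
print(graph)
```

Method calls such as `s.strip()` are `ast.Attribute` calls and are ignored here. Resolving them requires type information, which is one reason production tools add type and inheritance edges.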

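```python
import networkx as nx
import tree_sitter_python as tspython
from tree_sitter import Language, Parser

DEFINITIONS_QUERY = """
(function_definition
  name: (identifier) @func_name) @func_def
(class_definition
  name: (identifier) @class_name) @class_def
(import_statement
  name: (dotted_name) @import_name) @import_stmt
"""


class CodeKnowledgeGraph:
    """
    Build a semantic knowledge graph of the code to support cross-file analysis.
    _get_source_files, _extract_relationships, _extract_signature, _locate_function,
    _get_related_types and _get_function_body are helpers not shown here.
    """

    def __init__(self, repo_path):
        self.repo_path = repo_path
        self.graph = nx.DiGraph()
        self.language = Language(tspython.language())
        self.parser = Parser(self.language)

    def build_graph(self):
        """
        Build a graph containing these relationships:
        - function definitions and calls
        - class inheritance
        - module imports
        - variable definitions and uses
        """
        for file_path in self._get_source_files():
            tree = self._parse_file(file_path)
            self._extract_definitions(tree, file_path)
            self._extract_relationships(tree, file_path)
        return self.graph

    def _parse_file(self, file_path):
        with open(file_path, "rb") as f:
            return self.parser.parse(f.read())

    def _extract_definitions(self, tree, file_path):
        """
        Extract all definition nodes
        """
        # Queries are compiled from the Language, not the Parser; the query API
        # differs across py-tree-sitter versions, so both result shapes are handled
        captures = self.language.query(DEFINITIONS_QUERY).captures(tree.root_node)
        if isinstance(captures, dict):  # newer versions: {capture_name: [nodes]}
            captures = [(node, tag) for tag, nodes in captures.items() for node in nodes]
        for node, tag in captures:
            if tag == "func_name":
                node_id = f"{file_path}::{node.text.decode()}"
                self.graph.add_node(
                    node_id,
                    type="function",
                    file=file_path,
                    line=node.start_point[0],
                    signature=self._extract_signature(node.parent),  # the enclosing function_definition
                )
            elif tag == "class_name":
                node_id = f"{file_path}::{node.text.decode()}"
                self.graph.add_node(node_id, type="class", file=file_path, line=node.start_point[0])

    def query_context(self, cursor_position, radius=2):
        """
        Retrieve context relevant to the cursor position
        """
        # Locate the function under the cursor
        current_function = self._locate_function(cursor_position)

        # BFS up to `radius` hops; results come back nearest first
        # Upstream: who (transitively) calls the current function
        callers = nx.single_source_shortest_path_length(
            self.graph.reverse(copy=False), current_function, cutoff=radius)
        # Downstream: what the current function (transitively) calls
        callees = nx.single_source_shortest_path_length(
            self.graph, current_function, cutoff=radius)

        context_nodes = [n for n in callers if n != current_function][:3]   # at most 3 callers
        context_nodes += [n for n in callees if n != current_function][:5]  # at most 5 callees
        # Related type definitions
        context_nodes += self._get_related_types(current_function)

        # Order the context topologically (callers before callees); recursion creates
        # cycles, in which case fall back to the BFS order
        subgraph = self.graph.subgraph(context_nodes)
        try:
            sorted_context = list(nx.topological_sort(subgraph))
        except nx.NetworkXUnfeasible:
            sorted_context = list(dict.fromkeys(context_nodes))
        return self._format_context(sorted_context)

    def _format_context(self, node_ids):
        """
        Format nodes as text an LLM can consume
        """
        context_text = []
        for node_id in node_ids:
            node_data = self.graph.nodes[node_id]
            if node_data["type"] == "function":
                content = (
                    f"# File: {node_data['file']}\n"
                    f"{node_data['signature']}\n"
                    f"{self._get_function_body(node_id)[:500]}\n"
                )
                context_text.append(content)
        return "\n".join(context_text)
```

4. Architecture of Enterprise-Grade Code Generation Systems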

4.1 Multi-Model Routing

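```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class CodeRequest:
    prompt: str
    context_tokens: List[int]
    referenced_files: List[str]
    estimated_files: int


class ModelRouter:
    """
    Route each request to a model sized for the task's complexity.
    LocalModel, CloudModel and AgentRunner are placeholder wrappers, not real library classes;
    _classify_task and _check_ambiguity are helpers not shown here.
    """

    def __init__(self):
        self.models = {
            "fast": LocalModel("CodeLlama-7B"),       # deployed locally, low latency
            "balanced": LocalModel("CodeLlama-13B"),  # balance of quality and cost
            "powerful": CloudModel("GPT-4"),          # cloud API, for complex tasks
            "agent": AgentRunner(),                   # agent mode, end-to-end tasks
        }

    def route(self, request: CodeRequest) -> Any:
        """
        Pick a model from the task's features (the first matching rule wins)
        """
        features = self._extract_features(request)

        # Simple completion -> small model
        if features["task_type"] == "inline_completion" and features["context_length"] < 1000:
            return self.models["fast"]
        # Cross-file refactoring -> large model
        if features["cross_file_references"] > 3:
            return self.models["powerful"]
        # Test-case generation -> mid-sized model
        if features["task_type"] == "test_generation":
            return self.models["balanced"]
        # Complex feature implementation -> agent
        if features["requires_multiple_files"] or features["has_ambiguous_requirements"]:
            return self.models["agent"]
        # Default when no rule matches (otherwise route() would return None)
        return self.models["balanced"]

    def _extract_features(self, request: CodeRequest) -> Dict[str, Any]:
        return {
            "task_type": self._classify_task(request.prompt),
            "context_length": len(request.context_tokens),
            "cross_file_references": len(request.referenced_files),
            "requires_multiple_files": request.estimated_files > 1,
            "has_ambiguous_requirements": self._check_ambiguity(request.prompt),
        }
```

4.2 Retrieval-Augmented Generation (RAG) for Code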
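The `retrieve` method that follows merges three recall paths (semantic, symbol, dependency) and then reranks with a cross-encoder. A lighter way to merge ranked lists, named here plainly as a different technique from the article's, is reciprocal rank fusion (RRF). Each item scores the sum of 1 / (k + rank) over the lists it appears in, with k = 60 as the conventional default. Merging also deduplicates automatically, and an item found by several paths rises to the top. The snippet IDs below are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60, top_k: int = 5) -> List[str]:
    """Fuse several ranked lists of snippet IDs; duplicates across lists are merged."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


semantic = ["utils.py::parse_date", "models.py::User", "api.py::login"]
symbol = ["models.py::User"]
deps = ["models.py::User", "db.py::Session"]
fused = reciprocal_rank_fusion([semantic, symbol, deps])
print(fused)
```

RRF needs no extra model call, so it can serve as a cheap first merge before an optional cross-encoder pass over the survivors.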

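```python
from sentence_transformers import CrossEncoder


class CodeRAG:
    """
    Generation system backed by code retrieval.
    The code embedder, the vector store (e.g. a Milvus collection wrapper) and ASTIndex
    are placeholders; _extract_symbols, _get_dependencies, _retrieve_by_files and
    _deduplicate are helpers not shown here.
    """

    def __init__(self, code_embedder, vector_store, ast_index,
                 reranker_name="cross-encoder/ms-marco-MiniLM-L-6-v2"):
        self.code_embedder = code_embedder  # embedding model specialized for code
        self.vector_store = vector_store    # vector database, e.g. Milvus
        self.ast_index = ast_index          # structured index built from ASTs
        # A general-purpose text reranker; a code-tuned cross-encoder would likely fit better
        self.cross_encoder = CrossEncoder(reranker_name)

    async def retrieve(self, query: str, current_file: str, top_k=5):
        """
        Multi-path recall
        """
        results = []

        # 1. Semantic retrieval (vector similarity)
        query_embed = self.code_embedder.encode(query)
        results.extend(self.vector_store.search(query_embed, top_k=top_k))

        # 2. Symbol retrieval (exact match on function and class names)
        symbols = self._extract_symbols(query)
        results.extend(self.ast_index.lookup(symbols))

        # 3. Dependency retrieval (files imported by the current file)
        deps = self._get_dependencies(current_file)
        results.extend(self._retrieve_by_files(deps))

        # 4. Deduplicate and rerank (every path must return dicts with a "content" key)
        unique_results = self._deduplicate(results)
        reranked = self._cross_encoder_rerank(query, unique_results)
        return reranked[:top_k]

    def _cross_encoder_rerank(self, query, candidates):
        """
        Fine-grained reranking with a cross-encoder
        """
        if not candidates:
            return []
        pairs = [(query, c["content"]) for c in candidates]
        scores = self.cross_encoder.predict(pairs)
        for candidate, score in zip(candidates, scores):
            candidate["relevance_score"] = float(score)
        return sorted(candidates, key=lambda x: x["relevance_score"], reverse=True)
```

4.3 Security and Compliance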
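The `scan_security` method in the class that follows matches regular expressions against raw text. It therefore also flags `eval(` inside comments and strings, and a pattern such as `eval\s*\(` matches `ast.literal_eval(`. A complementary check, sketched minimally below with the standard `ast` module, looks only at real call nodes. It still misses indirection such as `f = eval; f(x)`, so it supplements a dedicated static-analysis tool rather than replacing one. The function name and the flagged set are illustrative assumptions.

```python
import ast
from typing import Dict, List

DANGEROUS_CALLS = {"eval", "exec", "__import__"}


def scan_calls(code: str) -> List[Dict[str, object]]:
    """Flag direct eval/exec/__import__ calls and any call that passes shell=True."""
    issues: List[Dict[str, object]] = []
    for node in ast.walk(ast.parse(code)):
        if not isinstance(node, ast.Call):
            continue
        if isinstance(node.func, ast.Name) and node.func.id in DANGEROUS_CALLS:
            issues.append({"line": node.lineno, "issue": f"call to {node.func.id}()"})
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                issues.append({"line": node.lineno, "issue": "shell=True"})
    return sorted(issues, key=lambda item: item["line"])


sample = (
    "import subprocess\n"
    "# eval( in a comment is not a call\n"
    "subprocess.run('ls', shell=True)\n"
    "value = eval(user_input)\n"
)
print(scan_calls(sample))
```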

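```python
import os
import re
import tempfile
from typing import Dict, List

import docker


class SecureCodeExecutor:
    """
    Sandboxed environment for executing untrusted code
    """

    def __init__(self):
        self.docker_client = docker.from_env()
        self.image = "sandbox-python:3.9"  # a locally built minimal Python image (illustrative name)
        # Keyword arguments passed straight to containers.run()
        self.resource_limits = {
            "cpu_period": 100000,
            "cpu_quota": 100000,  # quota / period = 1 CPU
            "mem_limit": "512m",
            "pids_limit": 64,     # guard against fork bombs
        }
        self.timeout = 30  # seconds; not a containers.run() argument, so kept separately

    def _create_temp_file(self, code: str) -> str:
        """Write the code to a temporary file and return its absolute path (bind mounts need one)"""
        fd, path = tempfile.mkstemp(suffix=".py")
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            f.write(code)
        return path

    async def execute(self, code: str, test_cases: List[Dict]):
        """
        Run untrusted code in an isolated container.
        The Docker SDK is synchronous, so these calls block the event loop;
        feeding test_cases to a test harness is not shown.
        """
        host_path = self._create_temp_file(code)
        container = self.docker_client.containers.run(
            self.image,
            command="python /app/solution.py",
            volumes={host_path: {"bind": "/app/solution.py", "mode": "ro"}},
            network_disabled=True,  # no network access
            detach=True,
            **self.resource_limits,
        )
        try:
            result = container.wait(timeout=self.timeout)
            logs = container.logs().decode("utf-8", errors="replace")  # stdout and stderr together
            return {
                "exit_code": result["StatusCode"],
                "stdout": logs,
                "success": result["StatusCode"] == 0,
            }
        except Exception as e:  # includes the timeout raised by wait()
            return {"success": False, "error": str(e)}
        finally:
            container.remove(force=True)  # force=True also kills a container that is still running
            os.remove(host_path)

    def scan_security(self, code: str) -> List[Dict]:
        """
        Static security scan (regex-based, so it can also match inside comments and strings)
        """
        issues = []
        # Dangerous functions and patterns
        dangerous_patterns = [
            (r"\beval\s*\(", "eval() carries a code-injection risk"),
            (r"\bexec\s*\(", "exec() carries a code-injection risk"),
            (r"subprocess\..*shell\s*=\s*True", "subprocess with shell=True carries a command-injection risk"),
            (r"__import__\s*\(", "dynamic imports can be used to load malicious modules"),
            (r'open\s*\(.*,\s*["\']w', "file writes: confirm the target path is safe"),
        ]
        for pattern, description in dangerous_patterns:
            for match in re.finditer(pattern, code):
                issues.append({
                    "severity": "high",
                    "description": description,
                    "line": code.count("\n", 0, match.start()) + 1,
                })
        return issues
```

5. Code Generation Trends for 2025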

5.1 The Rise of Program Synthesis

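Formal synthesis of executable code from natural language:

- Specification-driven generation: generating code that is provably correct with respect to a formal specification (for example, constraints checked with an SMT solver such as Z3)
- Programming by example (PBE): inferring program logic from input-output examples
- Neuro-symbolic combination: an LLM proposes candidates and a symbolic verifier guarantees their correctness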
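Programming by example can be illustrated with the smallest possible synthesizer. It enumerates programs in a tiny domain-specific language, shortest first, and returns the first one consistent with every input-output example. The DSL below, pipelines of four string functions, is a hypothetical choice for illustration. In the neuro-symbolic variant, an LLM would propose likely pipelines, and the same consistency check would act as the verifier.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

# A tiny, hypothetical DSL: programs are pipelines of these string functions
PRIMITIVES: Dict[str, Callable[[str], str]] = {
    "strip": str.strip,
    "lower": str.lower,
    "upper": str.upper,
    "reverse": lambda s: s[::-1],
}


def run_pipeline(names: Tuple[str, ...], s: str) -> str:
    for name in names:
        s = PRIMITIVES[name](s)
    return s


def synthesize(examples: List[Tuple[str, str]], max_depth: int = 3) -> Optional[List[str]]:
    """Enumerate pipelines shortest-first; return the first consistent with all examples."""
    for depth in range(1, max_depth + 1):
        for names in product(PRIMITIVES, repeat=depth):
            if all(run_pipeline(names, inp) == out for inp, out in examples):
                return list(names)
    return None


print(synthesize([("  Hello ", "olleh"), ("AbC", "cba")]))
```

Enumerating shortest-first is a simple form of Occam's razor: among programs that fit the examples, the smallest is returned. Because the search space grows exponentially with depth, practical systems prune it or let a learned model guide it.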

5.2 Multimodal Programming

Support for sketch-to-code, voice programming, and requirements conveyed through video:

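```python
# A possible future interaction (hypothetical API)
agent.implement(
    sketch_image="ui_mockup.png",                   # hand-drawn UI sketch
    voice_description="This is a login page...",   # spoken description
    video_demo="user_flow.mp4",                     # screen recording of the user flow
)
```

5.3 Continuous Learning and Personalization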

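- Code style learning: learning a developer's personal coding style from their past code
- Private knowledge bases: adapting to company-specific frameworks and internal APIs
- Incremental updates: the model keeps learning from new code commits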

6. Conclusion

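AI code generation is evolving from an "assistive tool" into a "programming partner." From Codex's simple completions to Devin's end-to-end development, the technology has moved from imitating syntax to understanding semantics, and from single-file edits to project-level planning.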

For developers, this means:

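- Redefined boundaries: focusing on architecture design and hard problems while handing routine implementation to AI
- New skill requirements: prompt engineering, reviewing AI-generated code, and designing human-AI collaboration workflows
- Quality and security challenges: test coverage and security auditing of AI-generated code become critical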

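Once AI can understand requirements, design architectures, write code, and debug and test it, the software engineering paradigm will be thoroughly reshaped. Developers who master these AI programming tools will become the "10x engineers" of the new era.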
